For the past week I’ve been at a pretty progressive client: very sexy offices, young hipster types, no bureaucracy, basically the opposite of where I came from. That in and of itself has been quite a change.

On top of that, this is my first assignment and I get to work on one of the coolest features in Visual Studio and Team Foundation Server 2012: Lab Management. We were not going for full-blown SCVMM lab management, but building around the idea of Standard Lab Management.

Are they significantly different? That is a yes and no kind of answer. No, because in the end it comes down to the Test Agents being installed on the machines and registering with the test controller. TFS 2012 even makes it easy enough that you don’t need to install them on each machine yourself. In turn this has made things really easy regardless of your choice of virtualization platform.

On the other hand, I say yes, they are different in how much of the feature you can use. With SCVMM environments it becomes far easier to debug those pesky issues that seem to be the ‘No-repro’ gremlins from Production.

Alright, back to my progressive client. It’s a cool setup. They’ve recently upgraded everything to Visual Studio/Team Foundation Server 2012, ASP.NET MVC4, and Database Projects (the good ones). They brought someone in to write Web Tests and CodedUI Tests, bought a good amount of supporting hardware, and asked us to tie it all together and make it happen in just under a week. Success.

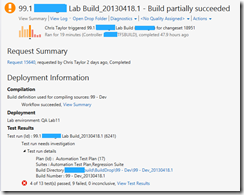

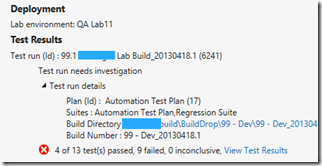

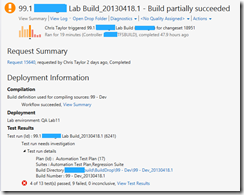

It was amazing. Through the lab management viewer we were literally watching two Windows 7 virtual machines run a battery of coded UI tests as if some person were actually sitting there. At the end, all the information was dumped into the build detail and you could see how you were doing. It was glorious.

Ok. What does all this mean?

When I was at JPMorgan, they wanted to measure what was labeled developer efficiency in a number of different ways. Some metrics were as simple as churn rates; some were more advanced, such as operational complexity, rate of change of code to requirements, etc. In the end we would get a single number, or a couple of numbers, and no one had any idea what they meant.

Let's fast forward in time again to our current implementation. This isn't your ideal environment for development, but it is a common one. This company has put together a fairly new team that is taking over a fairly large product. People need to find ways to prove that what they are doing provides value. The double-edged sword we often face is trying to fix the application while also figuring out how to show that the work we've been doing provides real value. We know things like unit tests and code coverage are supposed to help, but we struggle both to explain them and to make them look good.

What do we do instead? We work our asses off for weeks in hopes that management notices development is doing everything it can to make a better product, and shows some mercy.

Today, friends, I'm here to help you with that, in hopes of giving you both a better work/home-life balance and even a little personal satisfaction. Let's take a look at some key metrics that are listed on our build report and what they tell us about progress and quality.

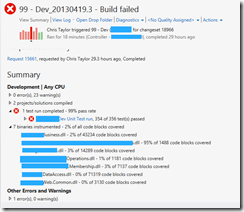

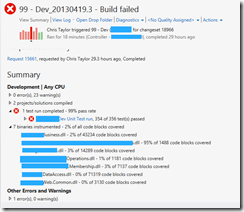

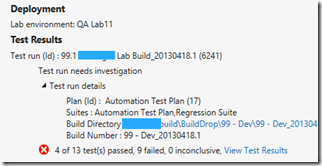

First off, we will start with the Lab Build and the CodedUI tests. One thing I notice is that blog entries such as this show a lot of successes, or they show a failure and then an immediate success. That's the thing: these are not one-day stories, and these tests are going to continue to fail for a while. You can expect the first few weeks to be a color-blind man's nightmare of green and yellow, along with quite a spike in bug rates. This is not uncommon, nor should it be used as a driver for some sort of large strategic change in your development organization.

What you will find is a lot of data errors, especially in CodedUI tests, if you don't use something like a gold database that is guaranteed to contain the same data each time and is kept up to date with the latest schema changes. Entity Framework and Code First do a really good job of providing a solution going forward, but most of us are working on already existing apps under tight budgets, with too many other stressful factors to take the time to implement it properly. It took us close to 2 years at JPM to do it (granted, it was a pretty complex set of databases), and there are a lot of factors to consider that we often don't. I digress...

Does that mean they provide no value? Of course not! The data just needs to be interpreted differently right now than it will be in a few weeks. For now, it means we need to focus on the data quality of our CodedUI tests. Do we implement something to seed data each time? Do we make our data more dynamic using existing data sources (even the target database itself to determine what user names already exist)?
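Both of those questions can be sketched in a few lines. This is only an illustration in Python, with an in-memory SQLite database standing in for the target database; the `users` table and the `seed_users` and `unused_username` helpers are made-up names for this example, not anything from the client's application or from the CodedUI tooling.

```python
import itertools
import sqlite3


def seed_users(conn, usernames):
    """Reset the (hypothetical) users table to a known 'gold' state."""
    conn.execute("DROP TABLE IF EXISTS users")
    conn.execute("CREATE TABLE users (name TEXT PRIMARY KEY)")
    conn.executemany(
        "INSERT INTO users (name) VALUES (?)", [(u,) for u in usernames]
    )
    conn.commit()


def unused_username(conn, prefix="uitest"):
    """Ask the target database which names exist and return one that does not."""
    existing = {row[0] for row in conn.execute("SELECT name FROM users")}
    for n in itertools.count(1):
        candidate = f"{prefix}{n}"
        if candidate not in existing:
            return candidate


# Option 1: seed the same data before every test run.
conn = sqlite3.connect(":memory:")
seed_users(conn, ["alice", "uitest1", "uitest2"])

# Option 2: make the test data dynamic by checking what already exists.
name = unused_username(conn)
print(name)  # uitest3
```

Seeding gives every run the same starting point, while the dynamic lookup lets a test survive data it did not create. In practice you would probably want some of both.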

What we should not attempt to infer is that, because the application is buggy (we know it is), our development team is doing a poor job. This data is simply exposing what the teams would have discovered anyway over a few months; instead the tools did it for us in a few minutes. That's a good thing. This is the beginning of our process, not the end.

Now we have a starting point for measuring how well we are improving the quality of our integration tests.

Second metric: code coverage and unit tests. I'll be honest, I wasn't really a fan of unit tests for a long time. I felt they didn't expose bugs as fast as something like integration tests could, or at least not the bugs that had more customer impact than the ones unit tests would find. The problem came back to selling my own value to management and showing how good a job I was doing. They wanted to see numbers, and I had no idea how to give them those numbers or at least how to explain them. Enter code coverage and what it means to developer productivity and application quality.

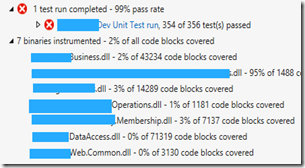

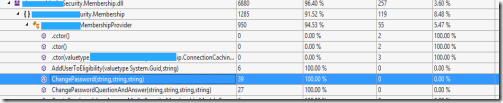

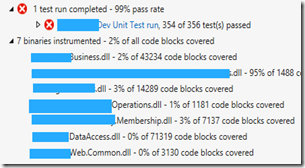

You can see in the details of this build that, overall, our passing unit tests exercise only about 2% of the code that makes up this application. Again, early on in our process of implementing new quality controls such as more complete unit testing and integration testing, we can expect to have what would be considered 'poor' metrics (like 2% coverage ;) ). It gives us the starting point. For developers, it lets you show how, over the next few weeks, you are increasing the amount of code tested in comparison to the entire code base.
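The arithmetic behind that number is just covered blocks divided by total blocks. Here is a quick sketch in Python; the block counts are invented for illustration (the build report above only tells us the result is about 2%), and both helper functions are hypothetical names.

```python
def coverage_percent(covered_blocks, total_blocks):
    """Coverage as a percentage: covered blocks over all blocks."""
    if total_blocks <= 0:
        raise ValueError("total_blocks must be positive")
    return 100.0 * covered_blocks / total_blocks


def blocks_needed(target_percent, covered_blocks, total_blocks):
    """How many more blocks must be covered to reach a whole-number target."""
    # Integer ceiling division avoids floating-point surprises.
    required = (target_percent * total_blocks + 99) // 100
    return max(0, required - covered_blocks)


# Hypothetical numbers: 50,000 blocks in the application, 1,000 covered.
total, covered = 50_000, 1_000
print(coverage_percent(covered, total))   # 2.0
print(blocks_needed(10, covered, total))  # 4000
```

Seen this way, "get from 2% to 10%" turns into a concrete backlog item: with these made-up counts, it means covering 4,000 more blocks.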

At this point in time, it's important not to focus on bug rates. As with CodedUI tests, you can expect a dramatic increase in the number of bugs discovered as the amount of code covered grows. The reasons are slightly different in this case (data shouldn't really be a factor in a unit test), but again it just exposes what you would have discovered later anyway. The other thing it does not tell you is whether the code tested fits the requirements. There are many other testing tools for that.

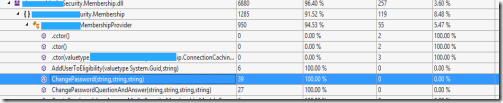

Lastly, for our developers it comes down to how we make that 2% rise to 10% in the next 3 weeks. The beauty of Microsoft's Code Coverage and Analysis tools is that they tell you exactly which blocks are covered and which ones are not. (BTW, when we say blocks, it could be something like the code inside the braces of an if statement; that's how granular it gets.) If you click on View Test Results for the unit tests, it will download the results to your local machine for analysis. It goes into the detail of saying exactly which namespaces, methods, and blocks are tested, and which ones are not. Double-click on one of the lines and the red color indicates the uncovered blocks. This way you know exactly what code isn't executed by any tests and can pretty easily just add new tests to cover those conditions.
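To make "uncovered block" concrete, here is a toy version of the idea in Python. It is not how the Visual Studio tools work internally. It uses the standard `sys.settrace` hook to record which lines of one function actually run, with lines standing in for blocks; `shipping_cost` and `lines_executed` are invented for the example.

```python
import sys


def shipping_cost(order_total):      # offset 0
    if order_total >= 100:           # offset 1
        return 0.0                   # offset 2
    return 5.0                       # offset 3


def lines_executed(func, *args):
    """Run func and return the line offsets (from its def line) that executed."""
    code = func.__code__
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is code and event == "line":
            hit.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # always restore whatever tracer was there
    return hit


all_lines = {1, 2, 3}

# A "test suite" that only ever tries a large order.
hit = lines_executed(shipping_cost, 150)
uncovered = sorted(all_lines - hit)
print("uncovered line offsets:", uncovered)

# Adding one test for a small order covers the remaining line.
hit |= lines_executed(shipping_cost, 20)
print("uncovered after new test:", sorted(all_lines - hit))
```

The first run never reaches the `return 5.0` line, which is the kind of thing the coverage view paints red. One extra test for a small order covers it.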

The value to the developer is twofold: first, you are not guessing what to write, and second, it gives you the ability to communicate the value you and your team are providing to management in a very clear and measurable way.

In my next article, we will take a deeper look at some of the reports that come with TFS 2012 and how managers can use them to communicate a clear and concise message to stakeholders about where development stands and what to expect in the future. These are not bad things. They allow people with a heavy (usually financial) investment to help early in the project, with more time to think about the consequences, rather than making knee-jerk reactions late in the project that may have significantly more radical consequences.